With recent developments in Embodied Artificial Intelligence (EAI) research, there has been a growing demand for high-quality, large-scale interactive scene generation. While prior methods in scene synthesis have prioritized the naturalness and realism of the generated scenes, the physical plausibility and interactivity of scenes have largely been left unexplored. To address this gap, we introduce PhyScene, a novel method dedicated to generating interactive 3D scenes characterized by realistic layouts, articulated objects, and rich physical interactivity tailored for embodied agents. Based on a conditional diffusion model for capturing scene layouts, we devise novel physics- and interactivity-based guidance mechanisms that integrate constraints from object collision, room layout, and object reachability. Through extensive experiments, we demonstrate that PhyScene effectively leverages these guidance functions for physically interactable scene synthesis, outperforming existing state-of-the-art scene synthesis methods by a large margin. Our findings suggest that the scenes generated by PhyScene hold considerable potential for facilitating diverse skill acquisition among agents within interactive environments, thereby catalyzing further advancements in embodied AI research.

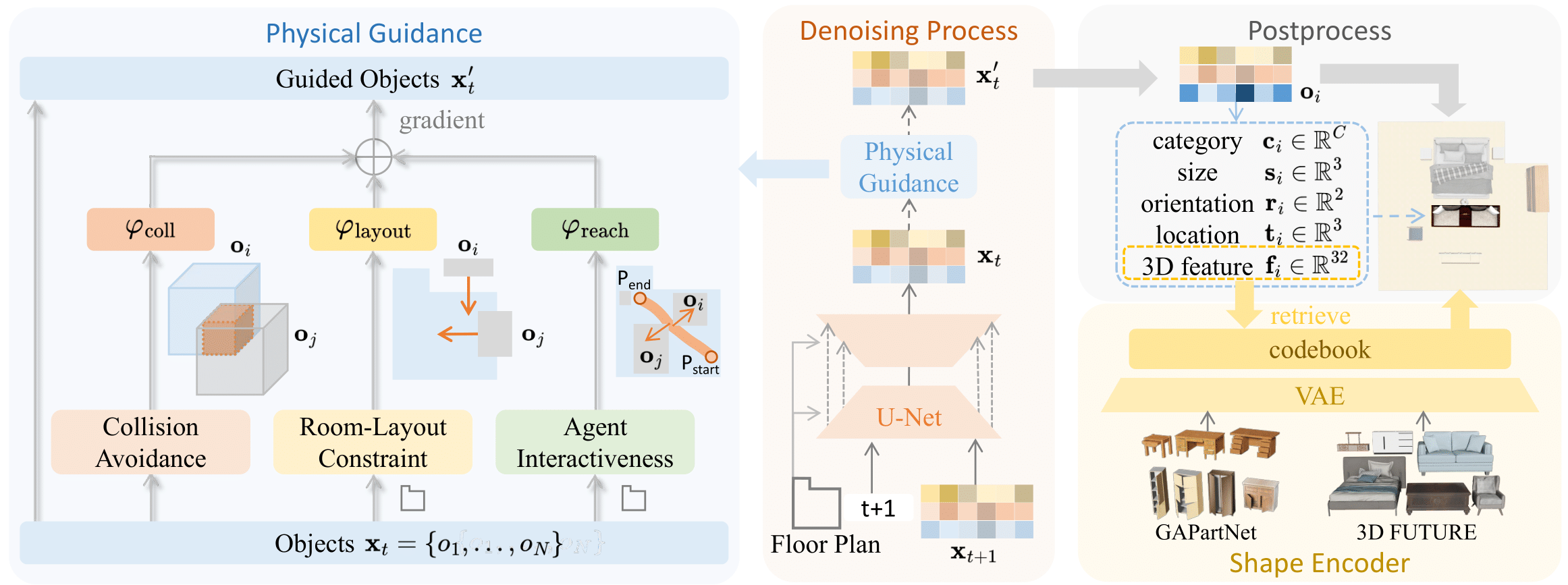

Physically interactable scene synthesis requires realistic layouts, articulated objects, and physical interactivity. We first leverage a diffusion model conditioned on the floor plan to capture scene layout distributions. We then apply three distinct physical guidance functions to steer the diffusion process and improve the physical plausibility and interactivity of the generated scenes. Finally, we use 3D features for cross-dataset retrieval of both articulated and rigid objects to translate the layout into a physically interactable scene.
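As a rough illustration of how a guidance function can steer a layout during sampling, the toy sketch below penalizes the overlap between axis-aligned 2D boxes and moves the box centers along the negative gradient of that penalty after each denoising step. This is not the PhyScene implementation: the names `overlap_cost_and_grad` and `guided_step`, the `denoise_fn` callback, the 2D box representation, and the `guidance_scale` value are all assumptions made for the example, and the noise term of a real diffusion sampler is omitted.

```python
import numpy as np

def overlap_cost_and_grad(centers, half_sizes):
    """Sum of pairwise overlap areas of axis-aligned 2D boxes,
    and the gradient of that sum with respect to the box centers."""
    cost = 0.0
    grad = np.zeros_like(centers)
    n = len(centers)
    for i in range(n):
        for j in range(i + 1, n):
            delta = centers[i] - centers[j]
            # Penetration depth per axis; positive on both axes means overlap.
            pen = half_sizes[i] + half_sizes[j] - np.abs(delta)
            if np.all(pen > 0):
                cost += pen[0] * pen[1]
                # d(pen_x * pen_y) / d(center_i) = -sign(delta) * [pen_y, pen_x]
                g = -np.sign(delta) * pen[::-1]
                grad[i] += g
                grad[j] -= g
    return cost, grad

def guided_step(centers, half_sizes, denoise_fn, guidance_scale=0.1):
    """One guided update: denoise, then nudge the result away from collisions.

    denoise_fn is a hypothetical stand-in for the learned denoiser.
    """
    mean = denoise_fn(centers)
    _, grad = overlap_cost_and_grad(mean, half_sizes)
    return mean - guidance_scale * grad

# Two unit-sized boxes that start out overlapping.
centers = np.array([[0.0, 0.0], [0.5, 0.2]])
half_sizes = np.array([[0.5, 0.5], [0.5, 0.5]])
initial_cost, _ = overlap_cost_and_grad(centers, half_sizes)

# Identity "denoiser" so that only the guidance term moves the boxes.
for _ in range(20):
    centers = guided_step(centers, half_sizes, denoise_fn=lambda x: x)
final_cost, _ = overlap_cost_and_grad(centers, half_sizes)
```

In this toy setting the overlap area starts at 0.4 and drops to zero within a few steps. Room-layout and reachability constraints could in principle be added as further cost terms of the same shape, though the actual formulations in the paper may differ.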

Here we retrieve articulated objects from the GAPartNet dataset and simulate the generated scenes in Omniverse Isaac Sim.

We use floor plans and room structures from ProcTHOR and generate scenes for unseen floor plans.

A robot interacts with articulated objects in the generated scenes using motion planning.

Visual comparison of PhyScene with ATISS and DiffuScene.

Results of the three distinct guidance functions with a floor-plan condition.

Comparison of PhyScene synthesis without and with guidance.

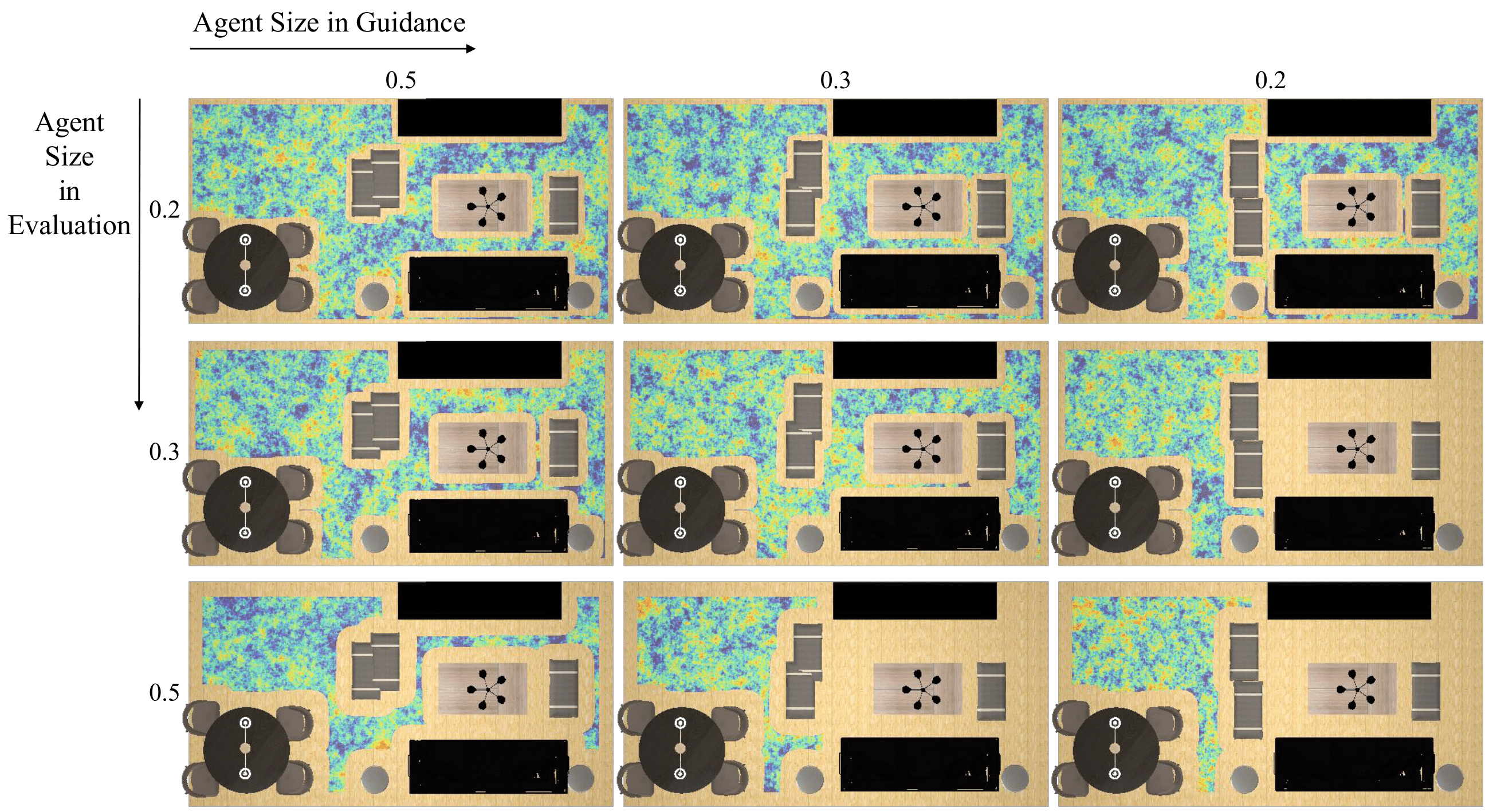

Comparison of different agent sizes in reachability guidance. A scene guided with a smaller agent size is not necessarily suitable for a larger agent at evaluation time.
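The effect of agent size on reachability can be illustrated with a small occupancy-grid check. The sketch below is an assumption-laden toy, not the evaluation used in the paper: it inflates obstacles by the agent radius (measured in grid cells, a hypothetical discretization) and then runs a breadth-first search between two cells. The names `inflate` and `reachable` are made up for the example, and clearance from the room boundary is ignored.

```python
from collections import deque
import numpy as np

def inflate(occupied, radius_cells):
    """Block every cell within radius_cells (Chebyshev distance) of an obstacle."""
    h, w = occupied.shape
    blocked = occupied.copy()
    for r, c in zip(*np.nonzero(occupied)):
        r0, r1 = max(r - radius_cells, 0), min(r + radius_cells + 1, h)
        c0, c1 = max(c - radius_cells, 0), min(c + radius_cells + 1, w)
        blocked[r0:r1, c0:c1] = True
    return blocked

def reachable(occupied, start, goal, radius_cells):
    """Breadth-first search on the inflated grid using 4-connected moves."""
    blocked = inflate(occupied, radius_cells)
    if blocked[start] or blocked[goal]:
        return False
    h, w = blocked.shape
    seen = np.zeros_like(blocked)
    seen[start] = True
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not blocked[nr, nc] and not seen[nr, nc]:
                seen[nr, nc] = True
                queue.append((nr, nc))
    return False

# A 7x7 room split by a wall in column 3 with a three-cell gap (rows 2 to 4).
grid = np.zeros((7, 7), dtype=bool)
grid[:, 3] = True
grid[2:5, 3] = False

small_agent_ok = reachable(grid, (3, 0), (3, 6), radius_cells=1)
large_agent_ok = reachable(grid, (3, 0), (3, 6), radius_cells=2)
```

Here the gap is wide enough for the agent with a one-cell radius but not for the agent with a two-cell radius, which mirrors the caption: a layout that is reachable for a small agent can still block a larger one.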

@inproceedings{yang2024physcene,
  title={PhyScene: Physically Interactable 3D Scene Synthesis for Embodied AI},
  author={Yang, Yandan and Jia, Baoxiong and Zhi, Peiyuan and Huang, Siyuan},
  booktitle={Proceedings of Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2024}
}